Guide to spotting fake news ahead of the 2024 U.S. election

- With the 2024 U.S. Presidential Election approaching, misinformation, especially deepfakes, is a significant concern for voters.

- Types of fake news include fabricated stories, misleading content, imposter content, and manipulated content, all of which can distort reality and mislead voters.

- Deepfakes are AI-generated fake audio, video, or images, often created to manipulate public opinion.

- Advanced AI tools from organizations like TrueMedia help detect deepfakes.

- ExpressVPN, a leading VPN service provider, offers a guide to help users spot the telltale signs of deepfakes and fake election news.

With the 2024 U.S. Presidential Election fast approaching on November 5, voters are swimming in a sea of information. Amid this deluge, the rise of fake news, particularly AI-generated deepfakes, poses a serious threat to informed voting. Fake news can distort reality and mislead voters, so it’s important to know how to spot it.

Deepfakes, the eerily convincing manipulated audio and video clips, have taken misinformation to a whole new level. Advanced AI tools make these fakes easier to create and more widespread, blurring the line between real content and fabricated content designed to deceive and manipulate public opinion.

Fake accounts add to the problem. Recent studies show that a significant number of fake social media accounts are actively spreading misinformation. These accounts aim to skew political narratives and influence voter opinions, which is why it pays to stay vigilant and carefully evaluate the news we consume.

This guide will help you navigate the fake election news minefield. We’ll cover how deepfakes work, tips for spotting them and verifying sources, and tools to protect yourself from misinformation. By the end, you’ll be better prepared to make informed decisions based on accurate information.

Jump to…

What constitutes fake news?

The impact of fake news on elections

What’s being done to stop deepfakes from circulating?

Combating deepfakes and fake election news with software

15 tips for spotting fake political news

AI in elections: Threat or opportunity?

What constitutes fake news?

Fake news refers to false or misleading information presented as if it were true. It aims to deceive and manipulate readers, often for political, financial, or social gain. In the context of elections, fake news can distort reality and mislead voters, affecting their perceptions and decisions. Here’s a closer look at the different types of fake news:

- Fabricated stories: Completely invented articles designed to look like legitimate news. They often feature sensational headlines to attract attention and provoke strong emotional responses. For example, a made-up story about a candidate’s health scare could deter voters.

- Misleading content: Real information presented in a misleading way. This includes taking quotes out of context or selectively using statistics to create a false narrative. For instance, a real photo of a politician at an event could be used to falsely imply they support a controversial cause.

- Imposter content: Fake websites or social media profiles that mimic reputable news sources, making them hard to tell apart from the real thing at a glance. These imposters can spread false stories that seem credible because they appear to come from trusted sources.

- Manipulated content: Real images, videos, or audio that have been altered to deceive. Examples include photoshopped images or edited videos that change the context or message.

Deepfakes: A new dimension of fake news

Deepfakes are a particularly insidious form of fake news. They use AI to create highly convincing but entirely fake audio, images, or videos. These deepfakes can easily deceive people, making it important to know how they work and to understand their potential impact.

How do deepfakes work?

Deepfakes are created using sophisticated AI techniques, specifically deep learning algorithms. These algorithms can analyze and learn from large datasets of images, audio, or video of a person. Once trained, the AI can generate new content that closely mimics the person’s appearance, voice, and mannerisms. Here’s a quick overview of the process:

- Data collection: Gathering a large amount of visual and auditory data of the target individual from various sources, including videos, photos, and audio recordings.

- Training the model: Using this data to train a deep learning model. The model learns to understand and replicate the target’s facial expressions, voice, and movements.

- Content generation: The trained model can then create new, highly realistic images, videos, or audio clips of the target person doing or saying things they never actually did.

The technology has advanced to the point where deepfakes can be almost indistinguishable from real content without expert analysis. For example, remember the AI-generated photos of Pope Francis in a large white puffer coat that fooled users online in 2023?

However, the dangers of deepfakes go well beyond harmless trickery, and they can be weaponized in many ways. For instance, deepfakes have been used in revenge porn, where a person’s face is superimposed onto explicit content without their consent, causing severe emotional and reputational damage. They can also be used to create fraudulent audio or video clips that deceive people in personal or business contexts, leading to financial loss or legal trouble.

The political arena is also a prime target for fake news and deepfake manipulation. Imagine a video in which an election candidate appears to make outrageous claims or behave inappropriately. Fabricated clips like this can spread like wildfire, misleading voters, tarnishing reputations, and potentially swaying election results.

The impact of fake news on elections

So, exactly how do these tactics impact elections?

Voter manipulation

Fake news often appeals to emotions rather than logic, using sensational headlines and fabricated stories to trigger strong reactions. By playing on emotions like fear, anger, or sympathy, it can bypass rational thinking and lead to quick, uninformed decisions. Exaggerated claims and provocative language also grab attention, which makes fake stories more likely to be shared widely.

Moreover, confirmation bias can significantly contribute to the spread of fake news. People tend to favor information that aligns with their preexisting beliefs, making it easier for fake news to reinforce these views. This bias means that individuals are more likely to accept and share information that confirms their existing opinions, even if it’s false.

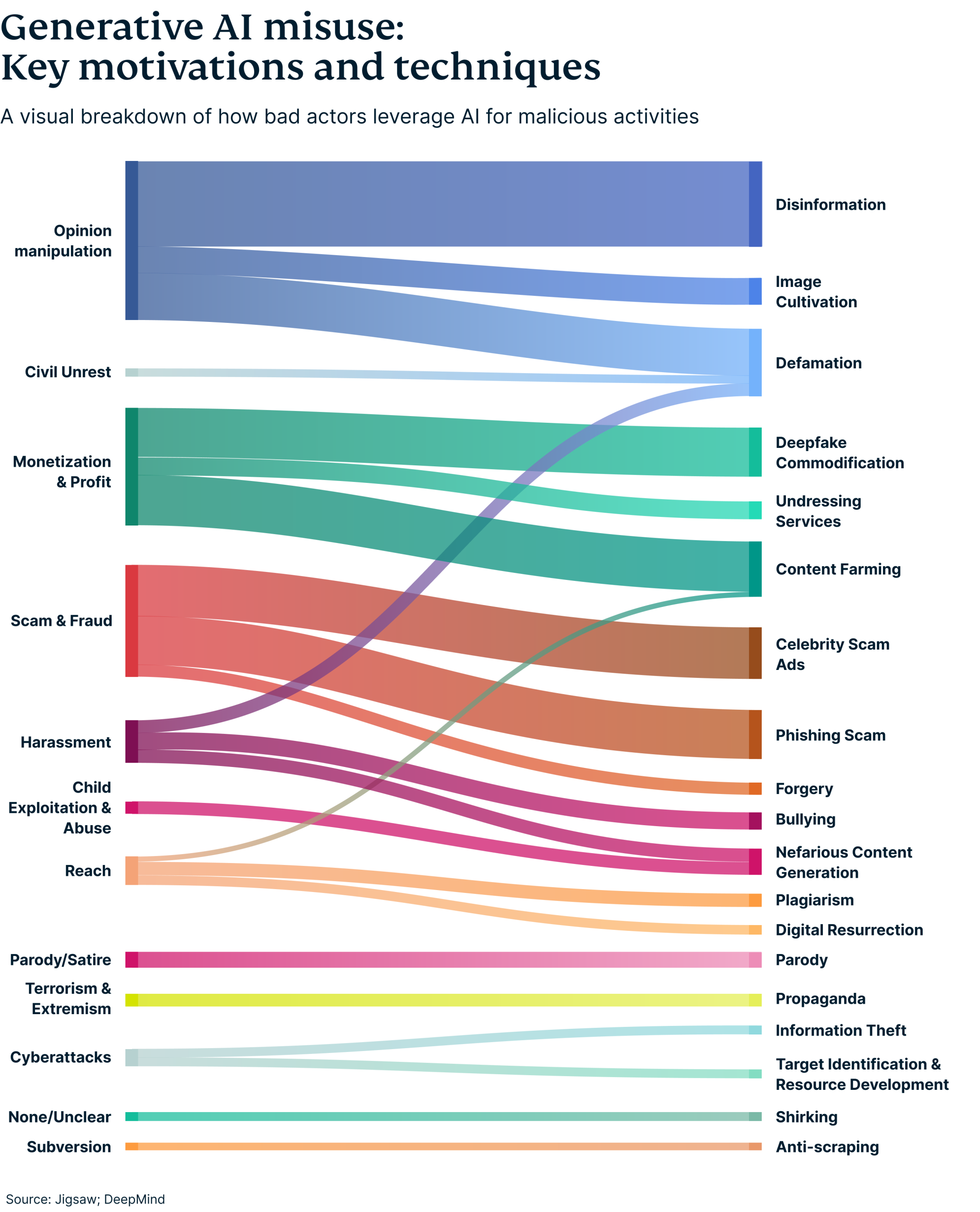

A recent Google DeepMind study found that swaying public opinion is the most common malicious goal behind generative AI misuse, primarily through deepfake media. Creating realistic but fake images, videos, and audio of people was almost twice as common as the next most frequent misuse: generating text-based misinformation for online content.

Erosion of trust

Constant exposure to fake news can erode trust in legitimate news sources and institutions. As voters struggle to tell real news from fake, their confidence in the electoral process and its outcomes can wane, leading to increased skepticism and disengagement. When voters can’t trust the information they receive, they become more cynical about the media and the democratic process.

A recent global survey by Ipsos for UNESCO found that 85% of people are worried about the impact of online disinformation, and 87% believe it has already harmed their country’s politics. This widespread concern directly impacts trust because when people are constantly confronted with conflicting information, they begin to question the credibility of all news sources, not just the fake ones.

The sophisticated nature of deepfakes, which can appear almost indistinguishable from genuine content without advanced tools, makes this issue even worse. The more realistic and widespread these fakes become, the harder it is for the public to trust what they see and hear.

Polarization

Misinformation often targets specific groups, deepening divisions and polarizing society. Spreading false or misleading narratives exacerbates tensions and creates a more fragmented and contentious political environment. Tailored misinformation can reinforce preexisting biases, leading different groups to have vastly different perceptions of reality, based on the misinformation they consume.

Members of these groups are more susceptible to believing and sharing fake news that fits their existing worldview.

The Shorenstein Center on Media, Politics, and Public Policy reports that while 75% of U.S. adults can distinguish real political news from fake, a significant minority still gets duped. The problem is more pronounced among older generations and among younger people with less formal education, compared with those who hold a bachelor’s degree or higher. This susceptibility deepens ideological divides and makes it harder for different groups to find common ground.

Social media algorithms further exacerbate this polarization by creating echo chambers that reinforce existing beliefs. This leads to more entrenched views and a wider divide between different political or social groups.

Impact on candidates

Deepfakes and fake news can also unfairly damage the reputations of political candidates. False videos or statements attributed to them can spread quickly, creating lasting negative impressions that are hard to counteract, even with factual rebuttals. A recent Cyabra analysis found that many accounts engaging in political discussions on platforms like X (formerly Twitter) are fake. For example, the study discovered that 15% of accounts praising Donald Trump and 7% of those praising Joe Biden weren’t real. These fake profiles are designed to manipulate public opinion and amplify specific narratives.

Voter turnout

The prevalence of fake news can also impact voter turnout. When voters are flooded with conflicting and misleading information, it can lead to confusion and apathy. Some may decide not to vote at all, believing their vote won’t make a difference in what seems like a corrupted process.

What’s being done to stop deepfakes from circulating?

In the lead-up to the 2024 U.S. Presidential Election, fake news and deepfakes are surging. NewsGuard has reported a staggering 1,000% increase in websites hosting AI-created false articles, while the World Economic Forum highlights 900% annual growth in deepfakes driven by generative AI. With misinformation escalating at this rate and threatening to distort election outcomes, the pressing question is: what’s being done to combat it?

Federal legislation and initiatives

The Federal Trade Commission (FTC) is working on new rules aimed at curbing the production and spread of AI-generated deepfakes. These proposed regulations focus on preventing AI-enabled impersonation and protecting both individuals and organizations from election-related misinformation.

Meanwhile, the Federal Communications Commission (FCC) addresses broadcast news distortion through its existing policies. However, these regulations are limited to traditional TV news and don't cover cable news, social media, or online platforms. Action can only be taken if there is substantial evidence of intentional falsification, balancing enforcement with First Amendment rights.

State-level actions

States are also taking the initiative. California, for example, has laws prohibiting the distribution of malicious deepfakes within 60 days of an election to minimize their impact. Similarly, Texas has made it illegal to create and share deepfake videos aimed at influencing elections.

Global and collaborative efforts

The fight against fake news is a global challenge. International efforts like the World Economic Forum's Digital Trust Initiative and the Global Coalition for Digital Safety are working to establish guidelines for AI and digital content. These initiatives involve collaboration between governments, industry leaders, and civil society to promote responsible AI use and develop tools for detecting and countering fake content.

The role of technology companies

Tech companies are also taking proactive measures. Platforms like Hugging Face and GitHub are implementing controls to limit access to tools that can create harmful content, promote ethical use through licenses, and incorporate provenance data to track the origin and alterations of digital content.

Combating deepfakes and fake election news with software

In addition to legal efforts, the fight against fake news is ramping up with advanced tools and collaborative initiatives. These technologies are becoming key to detecting and preventing the spread of misinformation, especially as deepfake videos and other sophisticated fakes become more prevalent.

One leading force in this battle is TrueMedia, a nonprofit founded by AI expert Dr. Oren Etzioni. The organization has developed state-of-the-art deepfake detection tools, currently in beta. Users can input links from platforms like TikTok, YouTube, and Facebook, and the tools estimate how likely it is that the content has been manipulated. According to the organization, the tools are over 90% accurate at flagging fake videos, images, and audio clips, helping to curb the spread of misinformation.

For instance, TrueMedia.org’s tool identified a deepfake video that falsely depicted a Ukrainian official claiming responsibility for a terrorist attack in Moscow, showing that it can catch even highly convincing fakes.

Other notable tools and initiatives

Various organizations are also developing innovative solutions to tackle misinformation:

- Reality Defender: Offers real-time deepfake detection technology that integrates with major social media platforms to quickly flag and remove manipulated content. Their scalable system handles the vast amounts of data processed by these platforms daily.

- ClaimBuster: An AI tool that flags check-worthy factual claims in real time, which is especially useful during debates or speeches. It quickly pinpoints potentially misleading statements so they can be verified further.

- Full Fact: Uses AI to speed up fact-checking, focusing on the most significant misinformation. It aids journalists and researchers by prioritizing critical issues for accuracy.

- Sensity AI: Focuses on detecting both visual and audio deepfakes, partnering with social media platforms to ensure rapid identification and response to fake content. Their advanced machine learning algorithms identify subtle inconsistencies in media that indicate manipulation.

- Media Bias/Fact Check (MBFC): Evaluates the bias and factual accuracy of media sources, helping users understand the reliability of different news outlets and encouraging a more informed approach to consuming news.

Note: These tools are listed as examples and do not constitute endorsements from ExpressVPN.

15 tips for spotting fake political news

Legal measures and advanced tools are essential, but your vigilance is the first line of defense against fake news. With misinformation on the rise, here are 15 practical tips to help you spot fake political news and ensure your decisions are based on accurate information.

1. Maintain a critical mindset

Approach all news with a healthy dose of skepticism. Question the authenticity of the information before accepting it as true. Ask yourself: Who is the source? What is their agenda? Is the information consistent with other credible sources?

2. Check the source

Once you know who is behind a story, verify that source’s credibility. Established news organizations with a reputation to uphold are less likely to publish false information. Research the outlet’s background and track record.

3. Look for verification symbols

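Social media platforms use verification symbols, such as checkmarks, to label accounts. These badges aren’t foolproof: on X, for example, a blue checkmark can be obtained through a paid subscription, so it doesn’t on its own confirm that an account is who it claims to be. Treat a badge as one signal among many, not as proof of authenticity.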

4. Cross-reference with reputable outlets

If you come across a surprising or sensational news story, cross-reference it with reports from reputable news outlets. Reliable organizations like the Associated Press, Reuters, and BBC News are known for their fact-checking and comprehensive coverage. Consistency across multiple reputable sources adds credibility to the story.

5. Investigate the author

Check the credentials of the article’s author. Look for a byline and a bio. Reputable journalists often have a history of credible reporting. If the author’s information is missing or seems dubious, be cautious about the content.

6. Examine the URL carefully

Fake news websites often use URLs that mimic legitimate news organizations but with slight alterations (e.g., “.co” instead of “.com”). Always double-check the URL to ensure it matches the official website of the news source.
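To make the URL check concrete, here is a minimal Python sketch that compares a link’s hostname against a short list of official domains you have verified yourself. The `OFFICIAL_DOMAINS` set and the `classify_url` function are hypothetical illustrations, not part of any real tool, and the lookalike test is a deliberately naive substring heuristic that will produce false positives (for example, any unrelated site whose name contains “bbc”).

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration: fill it with domains you have verified yourself.
OFFICIAL_DOMAINS = {"apnews.com", "reuters.com", "bbc.com", "bbc.co.uk"}


def classify_url(url: str, official: set[str] = OFFICIAL_DOMAINS) -> str:
    """Return "official", "lookalike", or "unknown" for a news link.

    "official"  -> the hostname is an allowlisted domain or one of its subdomains.
    "lookalike" -> the hostname contains an allowlisted brand name but is not
                   that domain (e.g. "reuters.co" or "apnews.com.example.info").
    "unknown"   -> neither; verify the site by other means.
    """
    if "://" not in url:
        url = "http://" + url  # urlsplit only finds the host after "//"
    # .hostname drops any port or user info and is already lowercased.
    host = (urlsplit(url).hostname or "").rstrip(".")

    # Exact match, or a genuine subdomain such as "www.reuters.com".
    if any(host == d or host.endswith("." + d) for d in official):
        return "official"

    # Naive brand check using the first label of each official domain ("reuters", "bbc").
    brands = {d.split(".")[0] for d in official}
    if any(brand in host for brand in brands):
        return "lookalike"
    return "unknown"


if __name__ == "__main__":
    for link in [
        "https://www.reuters.com/world/",
        "https://reuters.co/world/",
        "https://apnews.com.breaking-updates.info/story",
        "https://example.org/",
    ]:
        print(f"{link} -> {classify_url(link)}")
```

Note that the subdomain check anchors on the end of the hostname: “apnews.com.breaking-updates.info” starts with a trusted name but actually belongs to “breaking-updates.info”. A result of “lookalike” or “unknown” doesn’t prove a site is fake; it only means the address doesn’t match the outlets you have already vetted, so check it by hand before trusting or sharing the story.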

7. Analyze the quality of writing

Poor grammar, spelling errors, and sensationalist language are red flags. Reputable news sources maintain high editorial standards and typically avoid using excessively emotional or provocative language. Professional writing is usually a sign of credible reporting.

8. Scrutinize visual content

Images and videos can be powerful tools for spreading misinformation. Use reverse image search tools like Google Images or TinEye to trace the origin of photos. For videos, tools like the InVID-WeVerify verification plugin (also known as Fake News Debunker) can help you analyze and verify content.

9. Look for watermarks and metadata

Trusted news outlets often watermark their original content. A missing or suspicious watermark doesn’t prove an image or video has been manipulated, but it is a reason to look more closely. If possible, also check the file’s metadata, which can reveal clues about its origin and modifications, though metadata can be stripped or edited.
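Checking metadata usually means opening a file in a viewer such as your computer’s file properties panel or a dedicated tool. As an illustration of what those tools look for, here is a minimal Python sketch, using only the standard library, that scans a JPEG’s header segments for an Exif block (the APP1 segment where cameras typically record details such as the device and capture time). The function name `jpeg_has_exif` is hypothetical, and the parser handles only the common JPEG layout. Many social platforms strip metadata on upload, so a missing or present Exif block is a clue, not proof.

```python
import struct

SOI = b"\xff\xd8"        # start-of-image marker that begins every JPEG file
APP1, SOS = 0xE1, 0xDA   # APP1 usually holds Exif; SOS starts the compressed image data


def jpeg_has_exif(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an APP1 segment starting with b'Exif\\0\\0'."""
    if not data.startswith(SOI):
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            return False  # not at a marker: unexpected layout, stop scanning
        while pos + 1 < len(data) and data[pos + 1] == 0xFF:
            pos += 1  # skip optional 0xFF fill bytes that can pad the space before a marker
        if pos + 4 > len(data):
            return False  # truncated file
        marker = data[pos + 1]
        if marker == SOS:
            return False  # metadata segments all come before the image data
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            return False  # invalid segment length
        payload = data[pos + 4:pos + 2 + length]
        if marker == APP1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length  # jump to the next marker
    return False
```

To try it, read a downloaded image in binary mode and pass the bytes to `jpeg_has_exif`. A dedicated metadata viewer will show far more detail, such as the camera model and editing software, but the principle is the same: the information lives in labeled segments at the start of the file, where it can be read, removed, or rewritten.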

10. Assess the date

Fake news can sometimes involve real events but present them out of context by using old news as if it were current. Always check the publication date of the article to ensure that the information is still relevant and accurate.

11. Look for fact-checking labels

Many reputable organizations, like Snopes, FactCheck.org, and PolitiFact, actively debunk false claims. Check these sites if you encounter dubious news. Some social media platforms also flag content that has been disputed or fact-checked.

12. Be skeptical of outrageous claims

If a news story seems too shocking or outrageous to be true, there’s a good chance it isn’t. Fake news often relies on sensationalism to capture attention, so verify such stories through multiple reputable sources before believing or sharing them.

13. Understand confirmation bias

Be aware of your own biases. People tend to believe information that aligns with their existing beliefs. Make a conscious effort to seek out and consider information from a variety of perspectives, especially those that challenge your views.

14. Utilize browser extensions

Install browser extensions like NewsGuard or Fake News Detector. These tools provide ratings and analysis of news websites, helping you quickly identify sources that are reliable or potentially deceptive.

15. Gain media literacy

Understanding how the media works can help you better evaluate news stories. Familiarize yourself with techniques like clickbait, emotional appeal, and framing, which are often used in misleading content.

AI in elections: Threat or opportunity?

As we equip ourselves to discern fake news, we must also grapple with the broader implications of AI in our elections. While AI helps detect misinformation, it also significantly contributes to its creation. This dual nature of AI raises important questions about its place in our democratic processes.

Used ethically, AI has the potential to revolutionize elections in several positive ways. It can enhance voter engagement by providing personalized, relevant messaging that addresses the specific concerns of different communities. This not only helps campaigns connect more effectively with voters but also has the potential to increase voter turnout and civic engagement.

Moreover, AI can make political campaigns more accessible and inclusive. Technologies like real-time translation and speech recognition can help break down language barriers and improve accessibility for voters with disabilities. This promotes a more informed electorate, allowing more people to engage with political content in ways that suit their needs and helping ensure that decisions at the polls are based on a clear understanding of candidates’ policies and platforms.

However, we must approach the integration of AI in elections with caution. The potential for misuse—whether through the creation of deepfakes, targeted disinformation campaigns, or other manipulative practices—remains a significant concern. Ensuring that AI is used ethically in political campaigns requires strong regulatory frameworks, transparent practices, and vigilant oversight by tech companies and watchdog organizations.

Educating voters about the capabilities and limitations of AI in political campaigns can also help mitigate the risks of misinformation. An electorate that understands the potential and pitfalls of AI is better equipped to critically evaluate the information they encounter, fostering a healthier democratic process.

So, can AI and elections ever mix? The answer is nuanced. AI offers unique tools that could enrich political discourse and deepen democratic engagement—if used responsibly. Without proper safeguards, the technology could just as easily be weaponized. Balancing innovation with caution and leveraging AI as both a shield against misinformation and a tool for positive engagement might be the key to integrating AI into the electoral process in a way that strengthens democracy rather than undermines it.

Have you ever come across fake election news? What gave it away? Sound off in the comments below.

Take the first step to protect yourself online. Try ExpressVPN risk-free.
